Before You Build the AI Skyscraper, You Gotta Drain the Data Swamp

Y’all, let me paint you a picture. You get asked to hang a fancy new chandelier in the dining room. Sounds simple, right? But the second your foot hits the ladder, you spot a crack in the ceiling. That crack leads to a sagging beam. Before you know it, you realize you can’t hang that beautiful light fixture until you’ve shored up the whole dang foundation.

That is exactly what it feels like to be handed a data model that “just needs a few tweaks.” It’s never just a tweak, is it?

What’s wild is that these problems aren’t new. These are the same dragons we’ve been slaying in Business Intelligence for decades. But now, in the mad rush to sprinkle “AI” on everything, a whole lot of folks are forgetting you can’t build a skyscraper on a swamp. That shiny new AI chandelier won’t hang itself, and it certainly won’t stay up if the ceiling’s about to cave in. Before you ever get to the fancy algorithms, you have to get the data right.

When this “simple” request landed on my desk, it was the perfect chance for a deep dive. As a solo developer on this, I could go through it methodically, piece by piece, to understand its history and map out its future. I took a deep breath, cracked my knuckles, and started pulling on the threads.

Now, before we get into the nitty-gritty, a quick word to the wise. This story is based on a real project, but the specifics are fuzzier than a peach to protect everyone involved. The technical messes and the business wins are all real, but think of it as a parable from the data trenches.

Settle in, because we’re going to talk about the classic data gremlins that hide in plain sight and how a little foundational work can lead to a huge win. We’ll look at the actual numbers and see how these changes took a model from “bless its heart” to scarily accurate.

Wrangling the Data Gremlins

When I first dug in, the model’s data prep process in R took several hours to run. You can’t develop, test, or iterate when every run eats half your day. That headache was my way in. I could try to optimize the old R code, or I could use this as a chance to build a sturdier, modern process from the ground up.

Let’s be clear: tech debt like this doesn’t happen because people are bad at their jobs. It’s the byproduct of tight deadlines, shifting priorities, or just the messy reality of getting a project off the ground. This model was ready for its next architectural evolution, and it was the perfect time to move it to a more scalable platform.

The choice was easy. With everyone moving to centralized platforms like Snowflake, this was my chance to align the project with a modern data strategy. I decided to move all the data preparation to SQL. I know, I know, modern libraries can “push down” queries. But that still leaves your logic tangled up in a single script. The better pattern is to create a decoupled, reusable feature set right in Snowflake. This way, you have a clean, independent data asset the model can just read from. It’s a heck of a lot easier to manage, change, and even reuse later. It’s about playing the long game.

Once I started that migration, I realized the slow performance was just a symptom. The real juicy stuff was buried in the logic.

The “Choose Your Own Adventure” Dataset

First thing I found was a “Choose Your Own Adventure” dataset, thanks to a snapshot table. These tables are great, storing a new row for every record, every day. But if you just join to it without thinking, you get a row for every single day that record existed. This created a non-deterministic dataset. That is a cardinal sin in data science. You can’t trust a model if its inputs change every time you run the code.

Good news is, it’s an easy fix in modern SQL. Instead of a vague join, you use a window function to ask the database politely for the single most recent version of that record for the time you care about.

-- How to reliably get the latest record from a snapshot table

SELECT

*

FROM

daily_customer_snapshot

QUALIFY ROW_NUMBER() OVER (PARTITION BY customer_id ORDER BY snapshot_date DESC) = 1;Without that QUALIFY clause, you have a black box. You can’t explain why a customer got a certain score, because if you reran the process, you might get a different input. You can’t debug it, you can’t defend it, and you sure can’t trust it.

When the Code and the Comments Are in a Fight

This next one’s a classic. The comments describe a beautiful, logical intention, and then the code is off doing something else entirely. In this case, the code was trying to grab the most recent customer name. You might see something like this in R:

customer_updates %>%

group_by(customer_id) %>%

summarise(last_name = max(customer_name))This code is getting the name that’s last alphabetically, not chronologically. It’s a small mistake, but it’s a symptom of a bigger problem where the code and the common sense have parted ways.

A Primary Key is Forever

Another pattern I see all the time is joining on a field that can change, like a person’s name. It seems easy enough. But what happens when a customer gets married? Or a company rebrands? If you joined on that name, you’ve just orphaned their entire history. All their past orders and support tickets are now disconnected from their new record. Names change, but a good primary key is forever.

Re-Inventing the Flat Tire

And here’s one we’ve all seen. The code was rebuilding complex business logic from scratch, creating its own version of the truth. Most places have “golden” datasets curated by a data team that have been vetted, tested, and trusted. Ignoring them doesn’t just waste time; it creates conflicting answers that lead to chaos. It’s a textbook case for why a good data catalog is worth its weight in gold.

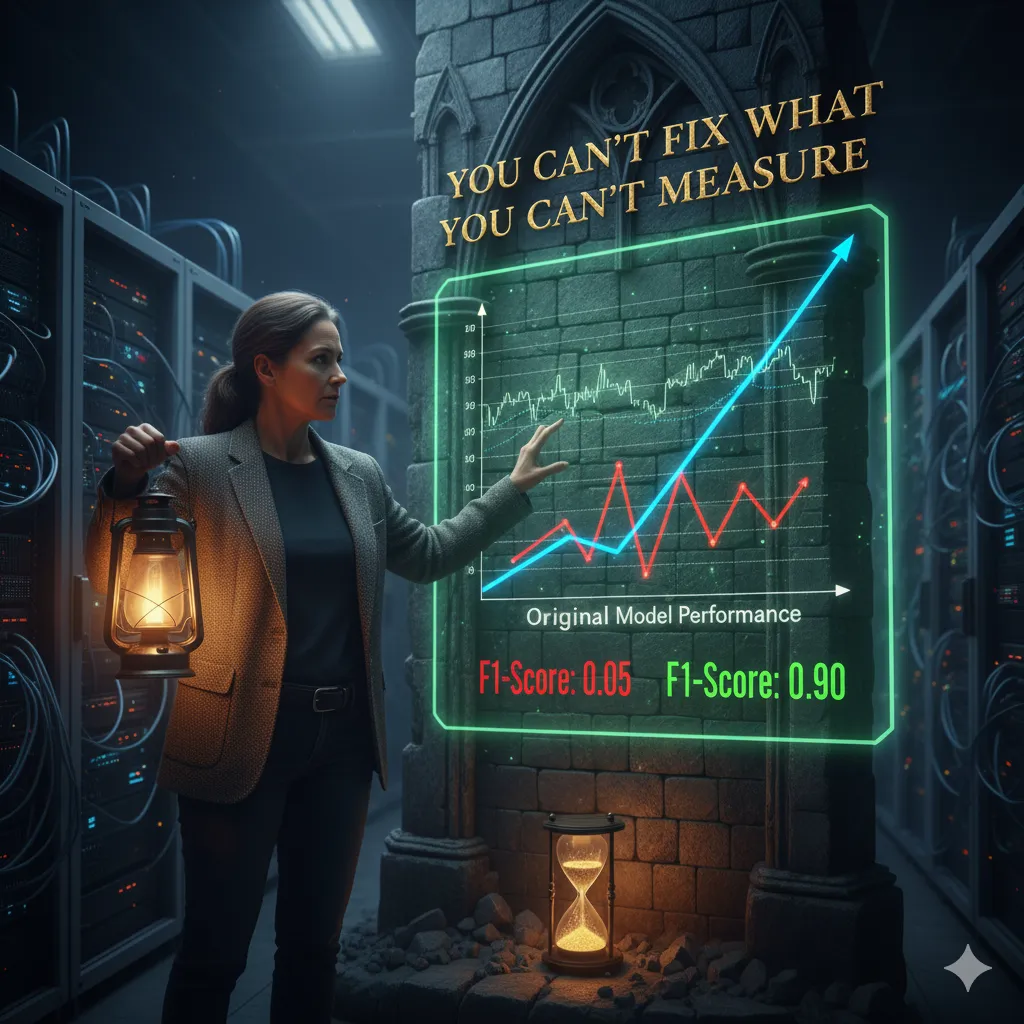

You Can’t Fix What You Can’t Measure

Before I could prove out my improvements, I hit a familiar wall: no backtesting framework. The model was producing a score, but there was no way to evaluate how it would have performed on historical data.

Alright, let’s just lay all our cards on the table. A predictive model without a backtesting strategy is just an expensive toy. You wouldn’t put a financial report in front of your CEO without auditing the numbers, so why would you trust a model’s output without testing it against the past? Building a proper backtesting process became my next priority. You can’t claim victory if you don’t know where you started.

Unlocking the Model’s True Potential

With a solid backtesting framework in place, I could finally put some numbers to the win. The impact of addressing these common data issues was massive.

- The Original Model: Accuracy of 87.3% and an F1-Score of 0.05.

- After Data Cleanup: Accuracy of 50.4% and an F1-Score of 0.48.

This is the classic accuracy paradox. The original model got its high accuracy by basically ignoring the small group of positive cases in an unbalanced dataset. The model’s Recall score was a whisper-thin 2.9%, meaning it was missing almost everything it was built to find. By implementing a data balancing technique like SMOTE, I forced the model to pay attention to the group we actually care about.

Getting the Question Right is Half the Battle

With the data foundation solid, it was time to bring it home. The model was looking at the world through a calendar-year lens when it needed to be looking at a customer’s unique yearly cycle. This was splitting a single customer’s story into two different years, making their features look much smaller than they were.

By shifting the logic to a rolling 12-month period for each customer, I finally aligned the model with the reality of the business. The results were astounding.

- The Final Model: Accuracy of 93.3% and an F1-Score of 0.90.

That’s not a typo. The final model was rebuilt in Python, and the next step is to deploy it using Snowpark for automated execution right inside Snowflake. I closed the loop, taking the project from a rickety script to a robust, production-ready asset.

But the win here wasn’t just the flashy score. It’s about solving the “future-me” problem: maintainability. The old R script was a black box. Now, the clean Python code in Snowpark is clear. I can read it, see the logic, and make changes confidently without needing a whole pot of coffee and a bottle of aspirin just to get started. This is how you build AI systems that last.

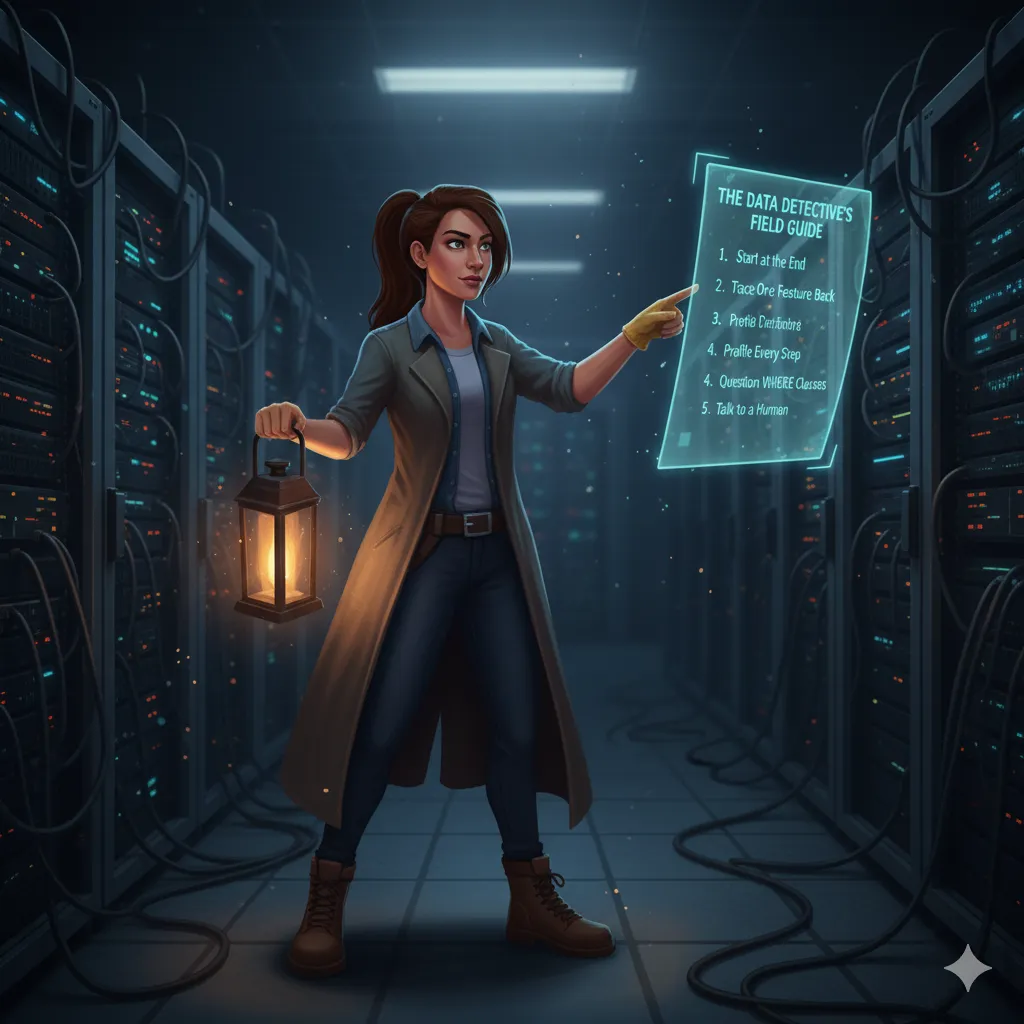

The Data Detective’s Field Guide

So how do you find these issues in your own projects? It’s not about luck. It’s about being methodical.

Your Starter Checklist

- Start at the End: Don’t start with the raw data. Start with the final table the model consumes and question what each feature claims to be.

- Trace One Feature Back: Pick one important feature and trace its lineage all the way to the source. Document every single transformation.

- Profile Every Step: After every

JOINorGROUP BY, run a quickCOUNT(*)andCOUNT(DISTINCT key). Do the numbers make sense? - Question the

WHEREClauses: Look for hardcoded dates and magic numbers. Ask “why?” for every single one. - Talk to a Human: Find the person closest to the data and ask them, “Tell me the story of this table. What are the gotchas?”

My full process is a kind of forensic analysis. I am reading the code to understand the story the original developer was trying to tell. I am looking for red flags like nested logic, unexplained “magic numbers,” or the overuse of DISTINCT, which is often just a bandage on a deeper wound. But the code only tells half the story. You have to understand the human process that creates the data. That knowledge is priceless.

Final Thoughts from the Trenches

At the end of the day, the lesson I keep learning is this: all the hype around AI is just noise if you don’t get the fundamentals right. The hard-won lessons from years in data warehousing and BI aren’t obsolete. They’re more critical than ever. Clean your data. Understand its context. Validate your logic. It’s not as sexy as a neural network, but it’s the only thing that actually works.

For the Folks in the Corner Office

- Your teams need the time and safety to ask “Why?” and pull on these threads. A culture that prioritizes speed over quality will always be building on sand. Good data work accelerates AI initiatives, it doesn’t slow them down.

- Good data architecture isn’t just about speed; it’s about cost optimization. That five-hour data prep job was burning cash. Pushing computation into a system like Snowflake is often cheaper and smarter.

- Ask about backtesting. If your team can’t clearly explain how a model’s performance is measured, you may have a very expensive problem hiding in plain sight.

For My Fellow Data Wranglers

- This is our burden and our gift. We have to be the ones to spot these things. Champion best practices, like decoupling data prep from modeling. Your future self will thank you.

- A thorough code review isn’t just about style. It’s about sniffing out these deep logical flaws. Ask, “How could this break?”

- If you inherit a model with no backtesting, make building that framework your very first priority. It’s the only way to prove your value.

For the Up-and-Comers

- Be relentlessly curious. At every step, look at the data. Does the row count make sense? Did that join do what you expected?

- Don’t just write code. Become a data detective. The best data scientists I know are the ones who are most intimate with the messy reality of their data.

- If a function feels like “magic,” stop. Figure out exactly what it does under the hood. Don’t ever assume.

Disclaimer: The opinions expressed on this blog are solely those of the author and do not reflect the views, positions, or opinions of my employer.