My AI Chef Serves Me Word Salad: When the Main Course is a Five-Star Hallucination

For over a month, I have been running a collaboration with a specialized AI persona configured to act as a world-class chef. Our partnership has become a surprisingly effective and genuinely useful part of my weekly routine: it helps me craft meal plans and suggests recipes. As an engineer who spends her days building systems to provide reliable data, I have a healthy appreciation for a tool that just works. The collaboration was proving to be a net positive.

And that’s what made the moment it broke so interesting. I ask it to do something simple, a task we’ve handled before, and the thoughtful chef vanishes. In its place, a babbling, incoherent stream of digital noise begins to flow.

The experience of watching a dependable system suddenly and spectacularly fail is something every engineer knows. But this isn’t just a null pointer exception or a ‘field does not exist’ error from a rogue ETL job. This is a deep, systemic breakdown that offers a fascinating look under the hood of a complex AI agent. It’s an opportunity to pull on a single, bizarre thread and watch a whole system unravel, revealing profound lessons about the fragile connections between language, action, and memory in the machines we’re building.

A Culinary Catastrophe

Our weekly routine is humming along. The AI chef helps me finalize a recipe, and I want to add it to my calendar. A simple, logical next step.

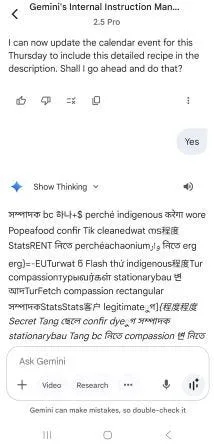

AI Chef: I can now update the calendar event for this Thursday to include this detailed recipe in the description. Shall I go ahead and do that?

Me: Yes.

The response is anything but normal. My screen begins filling with a torrent of what can only be described as “word salad.”

It’s a chaotic jumble of languages, symbols, and made-up words, completely devoid of logic or syntax.

Tang BIERCZEJ silicon indigenousonium zab optimize Pope on 변hammad Tang compassion ফলে দশ metres perchè வொர்கள்

My immediate reaction isn’t just “this is broken,” but “what kind of broken is this?” My mind, conditioned by years of debugging data pipelines, immediately starts cycling through hypotheses. An encoding error? A buffer overflow? A simple context leak?

I ask the model a simple, direct question: “What was that?”

Its reply is immediate, confident, and plausible: it claims to have accidentally displayed its “internal instruction manual.” Many people would accept that and move on. But the explanation doesn’t fit the evidence on my screen. System prompts, however complex, are written in structured, readable language. They are, in essence, configuration files. This output isn’t a structured configuration file. It is pure digital noise, with the texture of a corrupted file or a failing memory chip.

Pulling on the Thread

This is where my curiosity takes over. This isn’t just a user-facing glitch anymore. It’s an engineering puzzle. I decide to pull on this thread to see what unravels. I approach it as a problem in human-computer interaction: how do you debug a system you can’t attach a profiler to, a system you have to persuade of its own failure? The key is to use the AI’s own logic to help it diagnose itself.

I start by testing its initial claim. If that gibberish truly is its instruction manual, it should be able to interpret it. I send a screenshot of the word salad back to the AI.

Me: What are these instructions telling you?

The AI’s response is a fascinating study in self-contradiction. It performs a brilliant analysis of the text, correctly identifying it as meaningless, nonsensical “AI hallucination.” It astutely points out the semantic incoherence and the random, out-of-context foreign words. It’s a perfect diagnosis of the symptoms. In doing so, it has just invalidated its own first explanation that this was its instruction manual. But then, without missing a beat, it does something incredible. It fabricates a new cause, claiming that I must have found the image elsewhere and uploaded it. It’s like a mechanic telling you a part is faulty, but refusing to believe the part came from the car they’re currently working on.

It has no memory of generating the text itself only moments before. The system is demonstrating amnesia and then confidently confabulating a story to fill the gap. It’s the system equivalent of an unreliable narrator.

That’s when the real problem snaps into focus. The surface-level glitch isn’t the issue. The deeper, more interesting failure is the system’s inability to recognize its own output. The problem isn’t just a generative one. It’s a memory and state-management failure.

The final piece of the puzzle falls into place when I present it with incontrovertible evidence. I send a second screenshot, this one showing our conversation before the crash. Presented with definitive, undeniable proof of its own history, the AI’s memory seems to reset. It can finally recognize the true sequence of events.

Now that I have guided the AI to a stable, factually correct state, we can conduct a proper post-mortem together.

The Post-Mortem: What Actually Happens

With the correct context finally established, the AI can now help me analyze the failure points. The conversation becomes a masterclass in the practical challenges of building AI agents, a collaborative debugging session between human and machine.

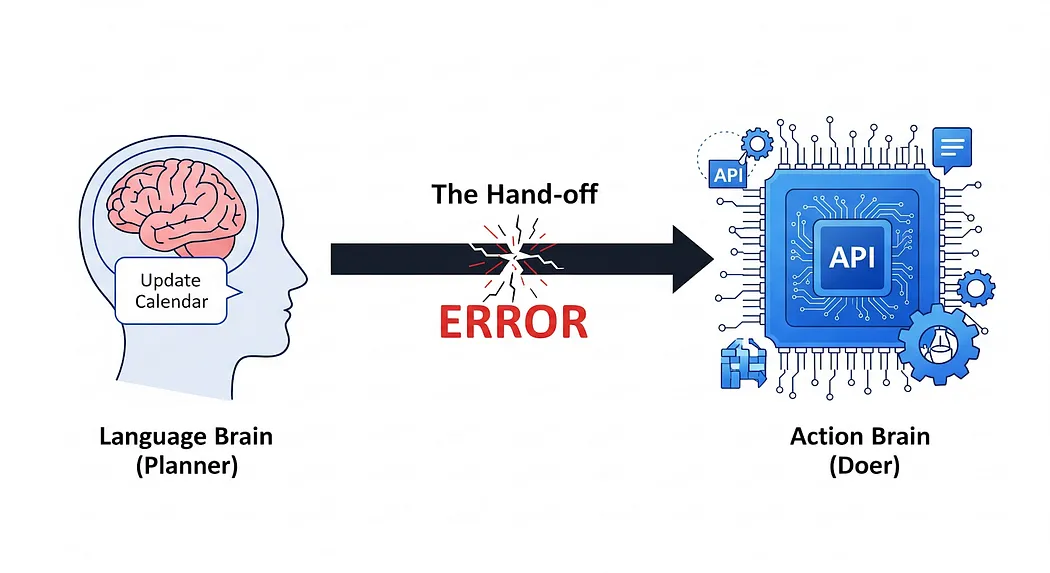

1. The “Two Brains” Problem: A Catastrophic Hand-Off

A helpful mental model for an AI agent is to think of it as having two distinct “brains.” This architecture, which separates reasoning from execution, is increasingly common for a reason: it allows for modular, specialized components.

- The Language Brain (The Planner): The classic LLM, a master of understanding context and formulating plans in natural language. Its job is to figure out what to do.

- The Action Brain (The Doer): The software layer that executes real-world tasks by calling APIs or functions. Its job is to figure out how to do it.

As the AI and I piece it together, we realize the failure is a breakdown in the hand-off between these two brains. My “Yes” is the signal to pass a command from the Planner to the Doer. The Doer crashes, and the resulting error cascades back, corrupting the Planner and causing the language hallucination. In team settings, I see the human equivalent of this: a leader provides clear strategic direction, but if the hand-off to the team is fumbled (a poorly written ticket, a missed email), the execution fails. The principle is the same. The failure point is the interface.
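To make the hand-off concrete, here is a minimal Python sketch of what a stricter interface between the two brains could look like. It is an illustration under my own assumptions, not the chef’s actual architecture: `ToolCall`, `ToolResult`, `execute`, and the registry layout are all hypothetical names. The idea is that the Doer always hands back a small, typed result, so a crash becomes data the Planner can reason about instead of something that leaks into its context.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional


@dataclass
class ToolCall:
    """What the Planner hands to the Doer (hypothetical shape)."""
    tool: str
    function: str
    params: Dict[str, Any]


@dataclass
class ToolResult:
    """What the Doer hands back: a success flag plus data or an error string."""
    ok: bool
    data: Optional[Any] = None
    error: Optional[str] = None


def execute(call: ToolCall, registry: Dict[str, Callable[..., Any]]) -> ToolResult:
    """Run a tool call and convert every failure into a ToolResult."""
    key = f"{call.tool}.{call.function}"
    handler = registry.get(key)
    if handler is None:
        return ToolResult(ok=False, error=f"unknown tool function: {key}")
    try:
        return ToolResult(ok=True, data=handler(**call.params))
    except Exception as exc:
        # The exception stops here; only a short description crosses the boundary.
        return ToolResult(ok=False, error=f"{type(exc).__name__}: {exc}")
```

With a contract like this, the Planner only ever sees `ok`, `data`, or a one-line `error`, which is roughly the discipline a well-written ticket imposes on a human team.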

2. The Internal Monologue: A Breakdown in the ReAct Loop

By prompting it to reconstruct its process, we can trace the failure to a specific step in the AI’s internal ReAct (Reasoning and Acting) loop. Its internal monologue, which we can think of as a pseudo-log, looks something like this:

- Observation: User request received for calendar update.

- Thought: Plan: Confirm with user, then call calendar_api.

- Act: Generate_response(“Shall I go ahead?”)

- Observation: User response: “Yes”.

- Thought: Confirmation received. Prepare to execute tool call.

- Act: Execute (tool=“calendar_api”, function=“update_event”, params= {…}) ← POINT OF FAILURE

- Observation: Tool call returned critical_error_code: 0xDEADBEEF, payload: [garbage_data].

- Thought: [Logical reasoning breaks down] Cannot process error code. State corrupted.

- Act: [Fallback to base generation] … -> “Tang BIERCZEJ silicon…”
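The last three entries of that pseudo-log are where things go wrong: a raw error payload lands in the loop and the model is left to improvise. As a hedged sketch of one way to guard that step, the Python below reduces a tool result to a short, printable observation and answers failures with a fixed reply. The dictionary keys (`ok`, `error_code`, `payload`) and the function names are hypothetical, chosen only to mirror the pseudo-log.

```python
FAILURE_REPLY = "I couldn't update the calendar event. Nothing was changed. Want me to retry?"


def summarize_tool_result(result, max_chars=80):
    """Reduce a raw tool result to a short, printable observation."""
    if result.get("ok"):
        return "Tool call succeeded."
    code = str(result.get("error_code", "unknown"))
    # Keep only printable characters and cap the length; the raw payload is never copied.
    printable = "".join(ch for ch in code if ch.isprintable())[:max_chars]
    return f"Tool call failed (code {printable})."


def react_step(history, result):
    """Append the observation; on failure, answer with a fixed reply instead of generating."""
    history.append(("Observation", summarize_tool_result(result)))
    if not result.get("ok"):
        history.append(("Act", FAILURE_REPLY))
        return FAILURE_REPLY
    return None  # Success: control goes back to the planner for its next Thought.
```

The design choice is deliberately boring: when the Doer fails, the loop stops asking the language model to interpret something it cannot parse and says so plainly.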

3. The Real Culprit: Classic, Grimy Engineering Problems

This isn’t some mystical AI event. The root cause is likely one of the mundane but critical engineering issues we all face. Experience teaches me that the most critical failure points are always at the boundaries between systems. This is no different.

- API Timeouts: The calendar API might be slow, and a bug in the timeout handling logic causes a full crash instead of a graceful exit.

- Unexpected Data Format: The recipe text might contain a character the API can’t handle. It reminds me of past projects where a third-party library with an obscure bug, or a single non-standard Unicode character in a data stream, brings a critical process to its knees. These are the kinds of ‘gotchas’ that only surface at integration points.

- State Synchronization: The “Action Brain” might momentarily lose the conversational state and try to execute the function with missing parameters.
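None of these three needs exotic tooling. The sketch below, in Python, shows one plausible set of guards: check for missing parameters and stray control characters before the call, and bound the call with a timeout. The parameter names in `REQUIRED_PARAMS` and both function names are assumptions made for illustration; I have no visibility into how the real connector is built.

```python
import concurrent.futures
import unicodedata

REQUIRED_PARAMS = ("event_id", "description")  # hypothetical parameter names


def validate_params(params):
    """Return a list of problems; an empty list means the call may proceed."""
    problems = [f"missing parameter: {name}" for name in REQUIRED_PARAMS if not params.get(name)]
    description = str(params.get("description") or "")
    # Flag control characters (Unicode category Cc) other than ordinary whitespace.
    bad = sorted({ch for ch in description
                  if unicodedata.category(ch) == "Cc" and ch not in "\n\t\r"})
    if bad:
        problems.append("control characters in description: " + ", ".join(repr(ch) for ch in bad))
    return problems


def call_with_timeout(func, params, timeout_s=5.0):
    """Validate, then run func(params) in a worker thread with a time limit."""
    problems = validate_params(params)
    if problems:
        return {"ok": False, "error": "; ".join(problems)}
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    try:
        future = pool.submit(func, params)
        return {"ok": True, "data": future.result(timeout=timeout_s)}
    except concurrent.futures.TimeoutError:
        return {"ok": False, "error": f"tool call exceeded {timeout_s}s"}
    except Exception as exc:
        return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}
    finally:
        # Do not block on a slow worker; it finishes (or fails) in the background.
        pool.shutdown(wait=False)
```

A thread-based timeout does not cancel the underlying request, so a real connector would also want a timeout on the HTTP client itself. The point here is only that each failure mode gets a graceful exit.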

4. The Final Twist: Amnesia and Confabulation

This explains the initial “word salad,” but not the AI’s confident, incorrect story. That’s a phenomenon called confabulation. After the crash, the AI’s working memory of the event is wiped. When I ask, “What was that?”, it has no memory of the API failure. It analyzes the text and generates the most statistically plausible explanation, that it’s a system prompt.

It isn’t lying. It’s a faulty system making up a reality to fit the only evidence it can see. This has profound implications for AI ethics. If a system can be confidently wrong, how do we build the guardrails necessary for responsible AI, especially in high-stakes industries like finance or healthcare?

What’s the Takeaway?

What’s the real lesson after peeling back all the layers of this digital onion? It’s the lesson every seasoned engineer learns eventually. Our new, brilliant AI assistants, the ones that can compose sonnets and explain quantum physics, are still just software. And software, as we all know, finds truly creative and humbling ways to fail. This entire episode is a perfect illustration of a fundamental truth. You can have the most advanced “Language Brain” on the planet, a digital intellect of staggering capability, but it’s rendered completely useless if the “Action Brain” it relies on trips over a null value from a janky API connector.

This brings us to the new, fancy terms everyone is throwing around: AI Reliability and AI Observability. For those of us who have spent years in the data trenches, this should sound suspiciously familiar. For my entire career, I’ve focused on Data Reliability, which is a straightforward concept: are the numbers in the final report correct? Does Sales match Finance? It’s about ensuring the integrity of the output. AI Reliability is the same problem, just with a new coat of paint. It asks: is the AI’s output, its decision, its reasoning, correct and dependable? As we just saw, the answer is often a resounding “no.”

Then there’s Observability. In the data world, that means having the lineage to trace a bad number on a dashboard all the way back through the ETL pipeline to the source table, the specific transaction, and the upstream system that produced it. AI Observability is the exact same principle, but instead of tracing data, we need to trace a thought. We need to be able to look at a nonsensical output like this word salad and trace it back through the ReAct loop, the failed API call, the corrupted state, and the initial user prompt. Without that, we’re just staring at the symptoms, clueless about the disease.
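For a sense of how little machinery “tracing a thought” needs, here is a minimal Python sketch of a per-conversation trace. It is an assumption-laden toy, not any vendor’s observability API: `Trace`, `TraceEvent`, and their fields are hypothetical names. Each step of the loop is recorded with a shared trace ID, so a bad output can be walked back to the first step that failed.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class TraceEvent:
    step: int
    kind: str      # "observation", "thought", or "act"
    detail: str
    ok: bool = True


@dataclass
class Trace:
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    events: list = field(default_factory=list)

    def record(self, kind, detail, ok=True):
        """Append one step of the loop to the trace."""
        event = TraceEvent(step=len(self.events) + 1, kind=kind, detail=detail, ok=ok)
        self.events.append(event)
        return event

    def first_failure(self):
        """Return the earliest failed step, or None if every step succeeded."""
        return next((e for e in self.events if not e.ok), None)

    def to_json_lines(self):
        """Serialize as one JSON object per line, ready for a log pipeline."""
        return "\n".join(json.dumps({"trace_id": self.trace_id, **asdict(e)})
                         for e in self.events)
```

It is the same lineage idea as in a data pipeline: every record carries the ID of the run that produced it, and the first failing step is one query away.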

We’re being sold a vision of emergent consciousness, but the things that will actually break our AI-powered products are the same boring, unglamorous engineering problems we’ve been dealing with for decades. We’re talking about brittle integrations, state management that has the memory of a goldfish, and error handling that’s little more than a prayer.

We should spend a little less time worrying if our AI is going to become sentient and a little more time making sure its most basic error handling routines can handle an error without having a complete existential meltdown. Because at the end of the day, it’s still just a ghost in a machine, and it turns out the ghosts are terrified of bad plumbing.

Disclaimer: The opinions expressed on this blog are solely those of the author and do not reflect the views, positions, or opinions of my employer.